Vibe Shifts

How growing skepticism of AI can ripple into markets, and why I’m still optimistic

The economy runs on vibes. Over the last couple of weeks, there seems to have been a vibe shift in the world of AI. It wasn’t a seismic shock, but the ripples are being felt and talked about across the tech world.

Let’s start with GPT-5. It’s been a bit over a week since OpenAI rolled out its latest model. ChatGPT’s nearly one billion users were promised a single model that provided a unified AI experience, instead of having to toggle between an alphabet soup of model names in the ChatGPT menu. Fewer hallucinations, better intelligence.

Instead, people were underwhelmed. Everyone from Bloomberg to the TBPN guys to YouTubers gave it a shrug. Reddit filled up with threads complaining that OpenAI had removed 4o, which many users felt was a warmer, friendlier model than GPT-5. OpenAI quickly put 4o back in the menu of model options.

For all the disappointment, though, GPT-5 actually was impressive, particularly the router that decides which AI model answers the user’s question. As SemiAnalysis put it, the router is the release.

Looking at the bigger picture, the fact that we even have AI this advanced is incredible. I am firmly in the camp that even if AI progress halted at current models, society would see years of innovation and productivity gains from embedding intelligence in every piece of software.

But whether or not it is real, society’s perception that we are shifting from step-function improvements in model quality and performance (like the jump from GPT-3 to GPT-4) to incremental ones should concern anyone who works in tech.

Why should it be concerning? Because we are in an AI-fueled bubble. And as with all bubbles, the AI bubble’s effects are not confined to the asset whose valuation is inflating.

The stock market, consumer sentiment, and the broader economy are also being affected by the AI bubble. As George Soros explains with his theory of reflexivity in The Alchemy of Finance, stock prices affect consumer sentiment, which affects economic fundamentals, which in turn affect stock prices. It’s a reflexive loop.

When one piece of the loop breaks, it can set off a chain reaction. The loop is reflexive on the way up and on the way down. What starts as a vibe shift can lead to an actual recession.

Which brings us back to AI this week.

The Code-Gen Come-Down

The growing chorus of AI criticism has not been confined to OpenAI and GPT-5; it has also reached Cursor.

Cursor is a tool developers use to accelerate code generation, and it is one of the leading AI-native application startups. Outside of the model companies, Cursor is arguably the most highly regarded startup of the AI cycle. Cursor and its competitors have seen revenue growth that is unparalleled in the startup world, with several surpassing $100M in ARR in just a few quarters.

Code-gen is the first killer application of AI.

The issue is that Cursor’s product runs on models from AI labs such as OpenAI and Anthropic, and Cursor pays those model providers high fees, so high that it reportedly has negative gross margins. In other words, it costs Cursor more to serve its customers than those customers pay for it. It’s like selling a dollar for 90 cents.
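To put the dollar-for-90-cents analogy in gross-margin terms, here is a quick worked calculation. The figures are purely illustrative, taken from the analogy rather than from Cursor’s actual financials: assume each unit of service costs $1.00 to deliver and sells for $0.90.

```latex
\[
\text{gross margin}
  = \frac{\text{revenue} - \text{cost of revenue}}{\text{revenue}}
  = \frac{0.90 - 1.00}{0.90}
  \approx -11.1\%
\]
```

Because gross margin counts only the direct cost of serving customers, a negative number means each additional sale adds to the loss before a single dollar of salaries, rent, or R&D is counted.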

Over the last couple of weeks, the code-gen startups that were previously AI darlings have come under intense scrutiny. Their gross margins and business structure have been loudly criticized, sometimes with good humor. Chris Paik, a venture capitalist, even went so far as to say Cursor doesn’t have product-market fit. Tell that to the investors who recently valued it at nearly $10B.

Culture Shock

The criticism of AI goes beyond business models, though. It has become cultural, both inside and outside the tech industry.

One phrase gaining popularity is “being oneshotted.” Technically, one-shot learning is an approach to training AI that enables a model to learn from only a single example.

In its new, colloquial sense, “being oneshotted” describes what happens to people who talk to AI as if it were a person, or who use it for their writing or thinking: they start to sound like ChatGPT.

An X account that goes by “spec” has garnered 30k+ followers by calling out famous people who seem to have been oneshotted. The list includes Joe Rogan, the All-In guys, Travis Kalanick, the White House’s X account, Paul Graham, and many others.

I know this might seem like a niche tech joke.

But John Oliver just had an entire 30-minute segment on his show dedicated to AI slop and how it’s being used by social media companies to increase user engagement.

Nearly three years into the AI era that ChatGPT’s release kicked off at the end of 2022, society is wrestling with the impact that AI adoption is starting to have on it.

Just as social media, which promised to bring people together, had the unintended consequence of deepening societal divisions, perhaps AI, which promised intelligence, is actually making us dumber.

Okay - that is too extreme.

But skill atrophy from overreliance on AI is a legitimate concern that has gone mainstream. New academic studies have produced mixed results on how AI affects developer productivity and student learning. In an article on “AI’s great brain robbery” and how universities can fight back, prominent historian and academic Niall Ferguson didn’t question whether AI has these negative effects; he took them as a given and asked what society should do about them.

I believe every new technology causes growing pains as society adjusts to its impact. Even an invention as seemingly simple as the bicycle led to a moral panic in Victorian society.

And in case I haven’t made it clear, I am very optimistic about AI’s impact on individuals and society. I’m aligned with Yann LeCun, Meta’s Chief AI Scientist, who said, “Amplifying human intelligence is the best thing we can do for humanity. It might have an effect on society similar to the appearance of the printing press in the 15th century... So maybe this is a new Renaissance we're going to see.”

But I am concerned about the vibe shift taking place with AI right now.

The scariest thing, though, is much simpler than these philosophical debates over AI’s role in society: it’s OpenAI.

If OpenAI sneezes…

OpenAI is the barometer for the AI bubble that the economy is riding on right now.

Sam Altman has talked about how important it is for OpenAI to stay a private company for now. He has said how hard it would be for OpenAI to have its stock publicly listed, implying that volatility in the stock would distract the company and dampen its ability to build for the long term.

The reality is that OpenAI is under such a microscope that the result is much the same as if it were public. Let me explain.

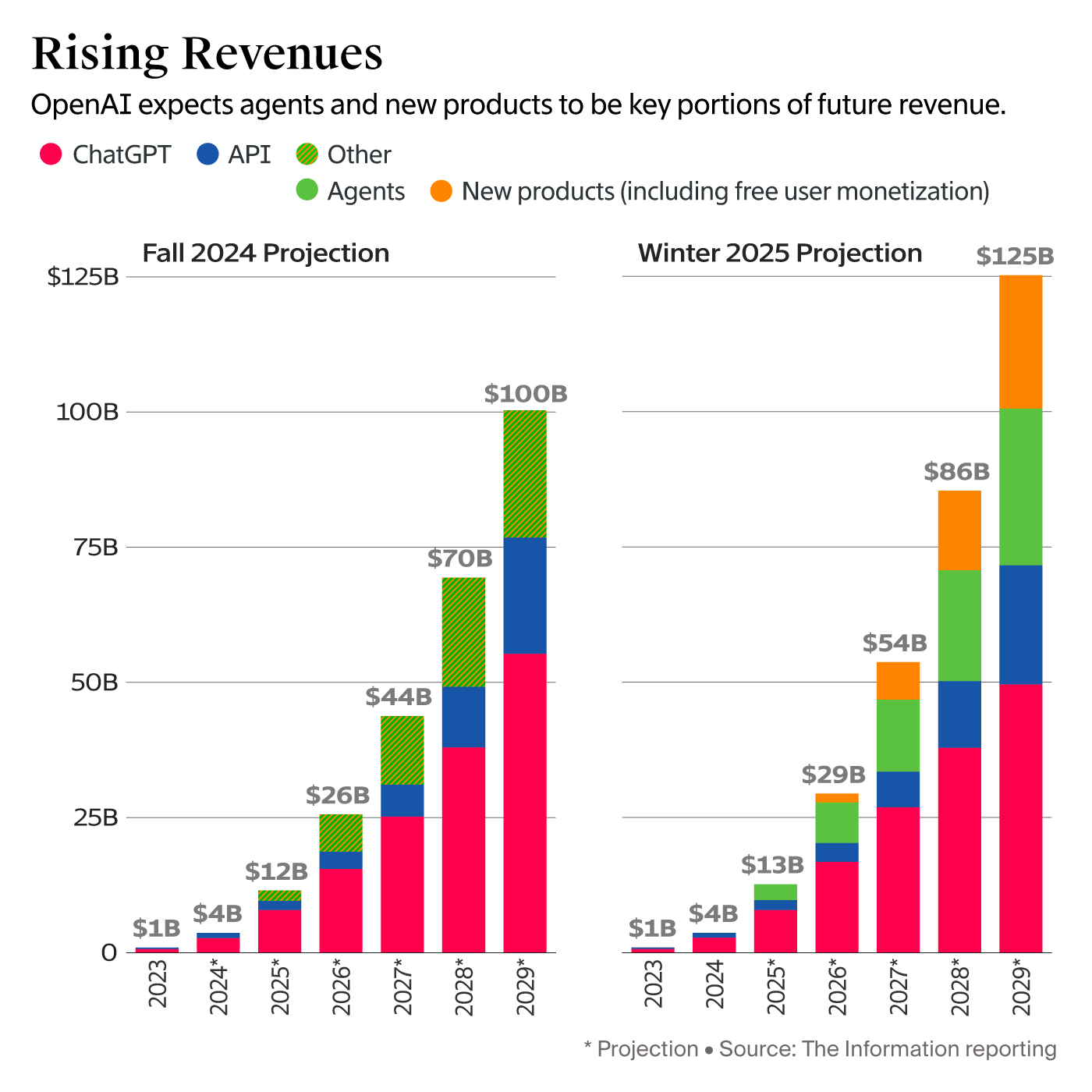

OpenAI is part of a small cohort of privately held companies (including Canva, Databricks, SpaceX, and others) whose shares regularly change hands through secondary sales among private investors. The Information now reports on OpenAI’s revenue results on a nearly quarterly basis, including two weeks ago, when it reported that OpenAI is at a $12B revenue run-rate and on track to beat its plan of $12.7B in revenue by the end of this year.

So the usual distinctions between private and public companies (that a private company’s financials are not disclosed publicly and its shares are not freely tradable) don’t exactly apply to OpenAI. (Before any of my finance friends come at me: yes, I’m aware the company still needs to sign off on share transfers and we have limited financials from it. You get my point.)

If OpenAI’s revenue growth falls short of expectations, or it lowers its revenue projections, that will undoubtedly be a big news story. If the miss is bad enough, it could affect the valuation investors assign to the company, and potentially their willingness to fund the “trillions of dollars” that Sam Altman wants OpenAI to spend on AI infrastructure.

Why? Because slowing revenue growth at OpenAI would imply that AI is less economically valuable than expected, whether to consumers, businesses, or both.

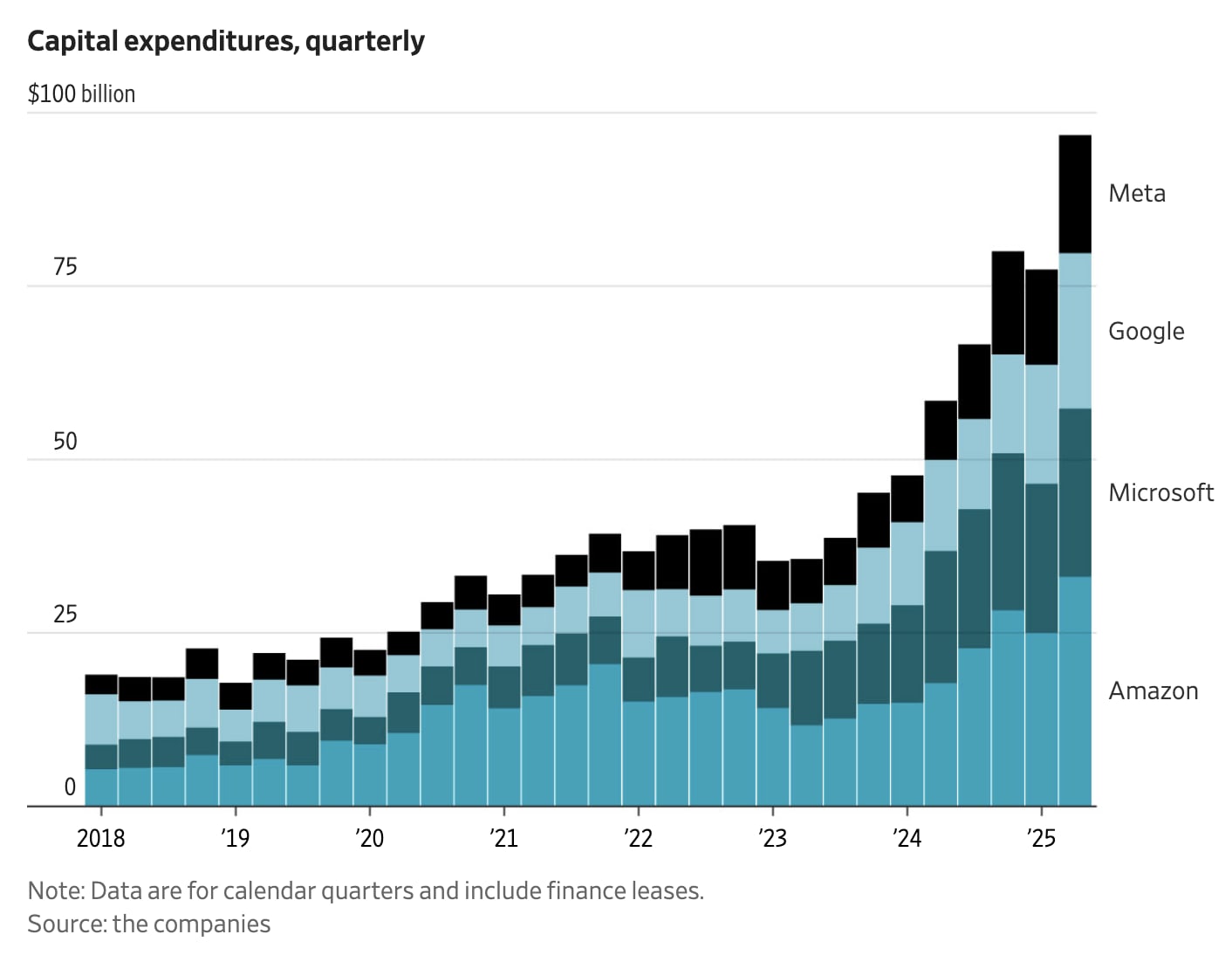

That poses risks to every major tech company (Meta, Google, Amazon, Tesla, and others) that is telling investors the return on investment from AI justifies massive capex.

Investors have looked past these companies spending hundreds of billions of dollars on data centers and AI infrastructure because they believe there is a pot of gold on the other side of the rainbow.

If that belief in the ROI of AI changes, these companies will come under massive pressure to cut capital expenditures or watch their stocks drop.

And if the mega-cap tech companies cut capex, who stands to lose the most? Nvidia, the most highly valued company in the world, which now accounts for 8% of the value of the S&P 500, the largest weight any single company has held in the index since at least 1981. Nvidia is leading the stock market’s rally, and as its share price goes, so goes the stock market.

Let’s step back from forecasting an impending stock market crash. Do I really think the last few weeks’ vibe shift means AI is less economically productive than people realize, and therefore signals an impending AI bust and recession? No, definitely not.

We are so early

We are still so early in rolling out AI across the economy. It’s as if society is in 1900: the light bulb was invented some 20 years earlier, but widespread adoption is complex and expensive, so most homes are still lit by kerosene lamps. Today, society runs on software and digital innovation, not just in technology but in every sector (healthcare, manufacturing, transportation, energy, finance), yet the vast majority of that software is not intelligent. Kerosene lamps.

There are endless opportunities to upgrade, replace, and entirely rethink the old software deployed across the economy with AI-powered software.

Enterprise use cases have largely concentrated on code-gen, and yes, for now those companies have questionable unit economics. But they are young companies with large amounts of capital on their balance sheets, huge user bases, and talented teams, and I believe there is a lot more innovation (and better unit economics) to come. Beyond code-gen, thousands of startups are launching to provide businesses with AI solutions that make them more efficient and productive.

On the consumer side, as many people, including venture capitalist Yoni Rechtman, have pointed out, countless consumer products, from cookbooks and travel guides to fitness apps and self-help programs, will be recreated as AI-native applications.

Beneath the application layer of specific use cases, we are still benefiting from innovation at the hardware layer.

Nvidia’s most advanced chips, the Blackwell line, are just starting to come online. Early benchmarks show big gains in training, which looks promising for when they are used to train a future flagship LLM like GPT-6.

Watch the vibes

So GPT-5 was a bit underwhelming. AI has shifted from novelty to mass adoption, and enough time has gone by that society is starting to feel its impact - both positively (innovation) and negatively (AI slop and skill atrophy). And as the dog days of summer 2025 wind down, it feels like there’s been a vibe shift in AI.

The good news is that OpenAI, Anthropic, and the big tech companies are seeing incredible revenue growth that so far has outpaced everyone’s expectations. Enterprises are very early in embedding AI across their organizations to improve efficiency, and consequently profitability. Demand for GPUs continues to far outstrip supply. Hardware innovation is continuing apace. There is a long runway of application innovation even on today’s models. AI will be used to make us healthier, wealthier, and, yes, more intelligent.

And we humans always overestimate a technology’s impact in the short term and underestimate it in the long term.

So there are many more reasons to be positive than not.

The future is bright but the path will be bumpy. Watch the vibes.